Tableau Data Management

This content is part of Tableau Blueprint, a maturity framework allowing you to zoom in and improve how your organization uses data to drive impact. To begin your journey, take our assessment.

Tableau Data Management helps you better manage the data within your analytics environment, ensuring that trusted and up-to-date data is always used to drive decisions. From data preparation to cataloging, search, and governance, Tableau Data Management will increase trust in your data, accelerating the adoption of self-service analytics. The offering is a separately licensed collection of features and functionality including Tableau Prep Conductor and Tableau Catalog, which manage Tableau content and data assets in Tableau Server and Tableau Cloud.

What is Tableau Data Management?

Overall, your organization will benefit from data governance and data source management approaches discussed elsewhere in Tableau Blueprint. Beyond these methodologies, you will often hear generic references to the term Data Management in the database, data analytics, and visualization communities. However, this term gets more specific when it comes to Tableau with Tableau Data Management, a set of capabilities for use with Tableau Server and Tableau Cloud. Regardless of whether you’re using Tableau Server for Windows or Linux, or Tableau Cloud, the features of Tableau Data Management are mostly identical (a small subset of features may only be available in Tableau Cloud or in Tableau Server).

Tableau Data Management encompasses a set of tools that help your organization’s data stewards and analysts manage data-related content and assets in your Tableau environment. Specifically, three additional feature sets are added when you purchase Tableau Data Management:

- Tableau Catalog
- Tableau Prep Conductor
- Virtual Connections with Data Policies

Tableau Catalog

The original feature of Tableau Data Management, Tableau Catalog provides features to help streamline access, understanding, and trust of Tableau data sources. Focusing on areas such as lineage, data quality, search, and impact analysis, Tableau Catalog can make it easier for data stewards and data visualizers/analysts to understand and trust data sources in Tableau Server and Cloud. Tableau Catalog includes additional features for Tableau developers via metadata methods in the Tableau REST API.

When Tableau Catalog is initially enabled, it scans all related content items in your Tableau Server or Cloud site to build a connected view of all related objects (Tableau Catalog refers to this as content metadata). This expands search capabilities beyond just data connections. Data stewards and visual authors may search based on columns, databases, and tables as well.

To reduce the possibility of inadvertently modifying or deleting an object that another object depends on (for example, renaming or removing a database column that is key to a production workbook), Tableau Catalog's lineage feature exposes interrelationships among all content on a Tableau site, including metrics, flows, and virtual connections. You can see the relationships among objects and analyze the impact of a pending change before you make it.

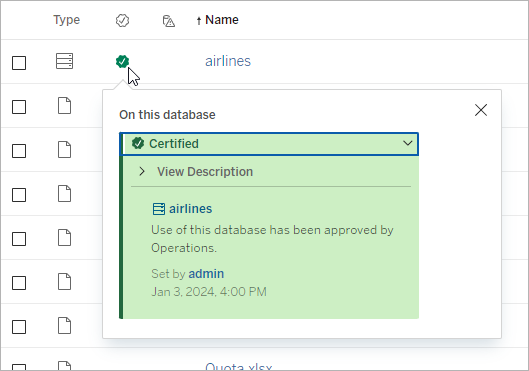

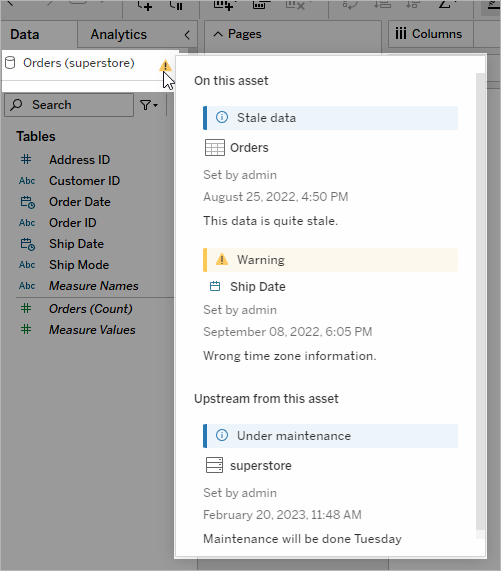
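The impact analysis that lineage makes possible can be pictured as a walk over a dependency graph. The following is a minimal sketch of that idea only; it does not use Tableau Catalog or any Tableau API, and the asset names and the `DOWNSTREAM` mapping are hypothetical.

```python
from collections import deque

# Hypothetical lineage edges: each asset maps to the assets that consume it directly.
DOWNSTREAM = {
    "db.orders.customer_id": ["Orders Data Source"],
    "Orders Data Source": ["Sales Workbook", "Orders Flow"],
    "Orders Flow": ["Cleaned Orders Data Source"],
    "Cleaned Orders Data Source": ["Executive Dashboard"],
}


def impacted_assets(asset, downstream=DOWNSTREAM):
    """Return every asset reachable downstream of `asset`, in breadth-first order."""
    seen = set()
    order = []
    queue = deque([asset])
    while queue:
        current = queue.popleft()
        for child in downstream.get(current, []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


if __name__ == "__main__":
    # List everything that could break if the column is renamed or removed.
    for name in impacted_assets("db.orders.customer_id"):
        print(name)
```

In this toy graph, a change to the `customer_id` column reaches a data source, a workbook, a flow, the flow's output, and a dashboard, which is the kind of chain the lineage view is meant to surface before a change is made.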

To improve trust in your Tableau data sources, Tableau Catalog provides supplementary information, such as expanded data-related object descriptions, the Data Details view, and keyword tags for enhanced searching flexibility. Certifying data sources places a prominent icon next to data sources to signify a data source owner or administrator’s trust in the data source. Data items (data sources, columns, and so forth) that may be a cause for concern to consumers, such as deprecated or stale data, may be designated with data quality warnings. In addition to a data quality warning option, sensitive data may be specifically flagged with Sensitivity Labels.

Tableau Prep Conductor

If you’re like many Tableau customers, you’ve discovered the benefits of Tableau Prep Builder to create sophisticated data preparation “flows” that combine multiple data sources, shape data, customize columns, and output to one or more desired data formats. But once you create the perfect Prep flow, how do you automate it to run and fully or incrementally update data sources on a schedule?

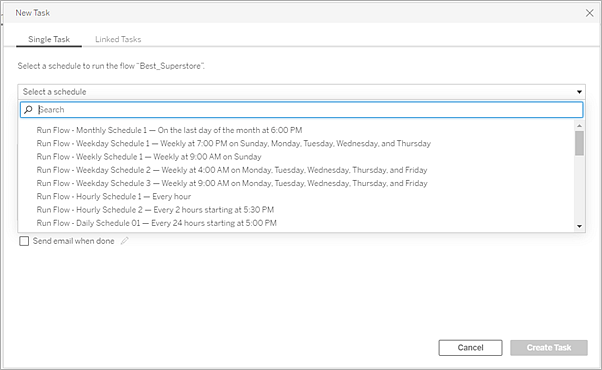

This is where Tableau Prep Conductor, another feature of Data Management, comes into play. Tableau Prep Conductor allows flexible scheduling of Tableau Prep Flows, regardless of whether they are published to your Tableau Server or Tableau Cloud environment from Tableau Prep Builder or created directly in a browser with Prep Flow Web Authoring. Begin by testing your web-based flow (you may run flows manually on-demand without Data Management, but will need to purchase Data Management to schedule flows to run automatically with Prep Conductor). The flow should run to completion and create your desired output data source without errors before you schedule.

If you are using Tableau Server, your administrator (or you, if you have proper privileges) can create custom schedules (such as “Daily at midnight,” “Sunday at noon,” and so forth) to run prep flows, much as you may have done for extract refreshes.

If you are using Tableau Cloud, a set of predefined prep flow schedules is installed by default. You cannot customize these or create your own prep flow schedules.

Schedule flows to run from the Actions menu. A Single Task schedule will run only the selected prep flow on the schedule you select. A Linked Task schedule will permit you to select one or more additional flows to run in sequence with the selected flow, should you wish to “chain” multiple flows to run in a specific order (perhaps to create one data source output to be used as an input data source for a subsequent flow). The flows will now run when scheduled, automatically updating or creating data sources that Tableau Workbooks may be based on.
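The ordering behavior of a Linked Task can be sketched in a few lines. This is an illustration of "run flows in sequence" only, not how Prep Conductor is implemented: the `run_flow` callback is hypothetical, and stopping the chain after a failure is an assumption made here because a later flow may depend on an earlier flow's output.

```python
def run_linked_task(flow_names, run_flow):
    """Run flows in the given order and report a status for each one.

    `run_flow` is a hypothetical callable that takes a flow name and returns
    True on success. Assumption: once a flow fails, the remaining flows are
    skipped rather than run against stale or missing input.
    """
    results = []
    for name in flow_names:
        succeeded = run_flow(name)
        results.append((name, "succeeded" if succeeded else "failed"))
        if not succeeded:
            break
    # Anything not reached is reported as skipped.
    for name in flow_names[len(results):]:
        results.append((name, "skipped"))
    return results


if __name__ == "__main__":
    chain = ["Clean Orders", "Join Customers", "Build Summary"]
    # Simulate a failure in the second flow.
    for name, status in run_linked_task(chain, lambda n: n != "Join Customers"):
        print(f"{name}: {status}")
```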

In addition to the ability to schedule flows, Data Management and Tableau Prep Conductor add options to monitor scheduled flow successes/failures, send email notifications when flow schedules succeed or fail, programmatically run flows with the Tableau Server/Cloud REST API, and benefit from additional Administrative View capabilities to monitor flow performance history.
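As a rough sketch of what running a flow programmatically might look like, the snippet below builds (but does not send) a request for the REST API's Run Flow Now method. The server URL, API version, site ID, flow ID, and token values are placeholders; the endpoint path and the `X-Tableau-Auth` header follow the pattern of the Tableau REST API, but check the reference for your version before relying on them.

```python
import urllib.request


def build_run_flow_request(server_url, api_version, site_id, flow_id, auth_token):
    """Build a POST request that asks the server to run a flow now.

    All arguments are placeholders supplied by the caller. The token is the
    credentials token returned by a prior REST API sign-in call.
    """
    url = (
        f"{server_url.rstrip('/')}/api/{api_version}"
        f"/sites/{site_id}/flows/{flow_id}/run"
    )
    return urllib.request.Request(
        url,
        data=b"<tsRequest></tsRequest>",  # empty request body
        method="POST",
        headers={
            "X-Tableau-Auth": auth_token,
            "Content-Type": "application/xml",
        },
    )


if __name__ == "__main__":
    request = build_run_flow_request(
        "https://tableau.example.com",  # hypothetical server
        "3.19",                         # assumed API version
        "site-id-placeholder",
        "flow-id-placeholder",
        "token-placeholder",
    )
    print(request.get_method(), request.full_url)
    # Sending it would be: urllib.request.urlopen(request)
```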

BEST PRACTICE RECOMMENDATION: If you are planning on running a large number of Tableau Prep Conductor flows on Tableau Server, you may need to adjust scaling of your server environment. If necessary, tune performance of your Tableau Server system by adding additional nodes or backgrounder processes to accommodate required prep flow load.

What about Tableau Cloud? While you won’t be required to consider architectural changes to Tableau Cloud for prep flow capacity, you are required to acquire one Resource Block (a unit of Tableau Cloud computing capacity) for each concurrent Tableau Prep Conductor flow you wish to schedule. Determine how many concurrent flow schedules you require and purchase Tableau Cloud resource blocks accordingly.
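One way to estimate how many flows run concurrently is to take the planned start and end times of each scheduled flow and find the peak overlap. The sketch below assumes you can estimate run windows in minutes since midnight; the example schedule is hypothetical, and real flow durations vary from run to run.

```python
def peak_concurrency(run_windows):
    """Return the maximum number of run windows that overlap at any moment.

    `run_windows` is a list of (start, end) pairs in minutes since midnight,
    with `end` exclusive: a flow ending at 30 does not overlap one starting at 30.
    """
    events = []
    for start, end in run_windows:
        events.append((start, 1))   # a flow starts
        events.append((end, -1))    # a flow finishes
    # Tuples sort by time first; at equal times, -1 sorts before +1,
    # so finishes are counted before starts.
    events.sort()
    peak = current = 0
    for _, delta in events:
        current += delta
        peak = max(peak, current)
    return peak


if __name__ == "__main__":
    # Hypothetical nightly schedule: three flows with estimated run windows.
    schedule = [(0, 30), (15, 45), (30, 60)]
    print("Resource Blocks needed:", peak_concurrency(schedule))
```

Under the one-block-per-concurrent-flow rule described above, the peak overlap is the number of Resource Blocks to plan for.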

Virtual Connections

On to our next Data Management feature: virtual connections. A virtual connection provides a central access point to data. It can access multiple tables across several databases. Virtual connections let you manage extracting the data and the security in one place, at the connection level.

When are virtual connections useful?

If you consider a traditional way of sharing a database connection with multiple workbooks in Tableau, you’ll probably think of connecting directly to a database server like SQL Server or Snowflake, supplying database login credentials, adding and joining one or more tables, and then publishing the data source to Tableau Server or Tableau Cloud. While you may choose to use this as a live connection to data, it’s very possible you want to extract data from the data source to speed up connected workbooks.

For the sake of discussion, let’s consider that you may do this any number of times to accommodate, for example, a different set of tables or joins, resulting in multiple published (and, perhaps, extracted) data sources used for a series of workbooks that have different table/join requirements, but that all use the same initial database.

Now, let’s consider what happens if something about the initial SQL Server or Snowflake database that’s referenced in that series of data sources changes: perhaps tables are renamed, additional fields are added, or database credentials are changed. You are now faced with the task of opening each of the previously created data sources, making the necessary changes to accommodate the database change, and republishing (and, perhaps, rescheduling extract refreshes).

You might find it far simpler to create only one initial data connection “definition” that stores the database server name, credentials, and table references. You might also prefer to extract data from that larger “definition.” Then, when you need to create different data sources for various combinations of tables, joins, and so forth, you can reference that initial “definition” rather than connecting directly to one or more database servers. If something in the core database structure changes (for example, table names change or credentials are modified), you only need to modify the initial “definition” object, and all the dependent data sources automatically inherit the changes.
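The benefit of a single shared “definition” can be shown with a small model. This is a conceptual sketch only: the class names, fields, and server names are hypothetical and do not correspond to Tableau objects or APIs.

```python
from dataclasses import dataclass


@dataclass
class ConnectionDefinition:
    """Hypothetical shared definition: server, credentials, and tables."""
    server: str
    username: str
    tables: list


@dataclass
class DataSource:
    """Hypothetical data source that references a shared definition."""
    name: str
    connection: ConnectionDefinition  # a reference, not a copy
    joined_tables: list

    def describe(self):
        return f"{self.name} -> {self.connection.server} as {self.connection.username}"


if __name__ == "__main__":
    shared = ConnectionDefinition("sql01.example.com", "svc_tableau", ["orders", "customers"])
    sales = DataSource("Sales", shared, ["orders", "customers"])
    ops = DataSource("Operations", shared, ["orders"])

    # One change to the shared definition is seen by every dependent data source.
    shared.server = "sql02.example.com"
    print(sales.describe())
    print(ops.describe())
```

Because both data sources hold a reference to the same definition, moving the database to a new server is a single edit rather than one edit per data source.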

The Data Management feature introduces this shared “definition” capability via a virtual connection. A virtual connection is similar to a standard data source connection in that it stores the database server, login credentials, and selected tables. And, like a traditional Tableau data source, a virtual connection can contain connections to more than one database/data source (each with its own set of credentials and tables). While some metadata modifications are allowed in a virtual connection (for example, hiding or renaming fields), tables are not joined within the virtual connection. When you eventually use the virtual connection as a direct source for a workbook or as a connection type for an additional published data source, you may join tables and perform further customizations to the data source.

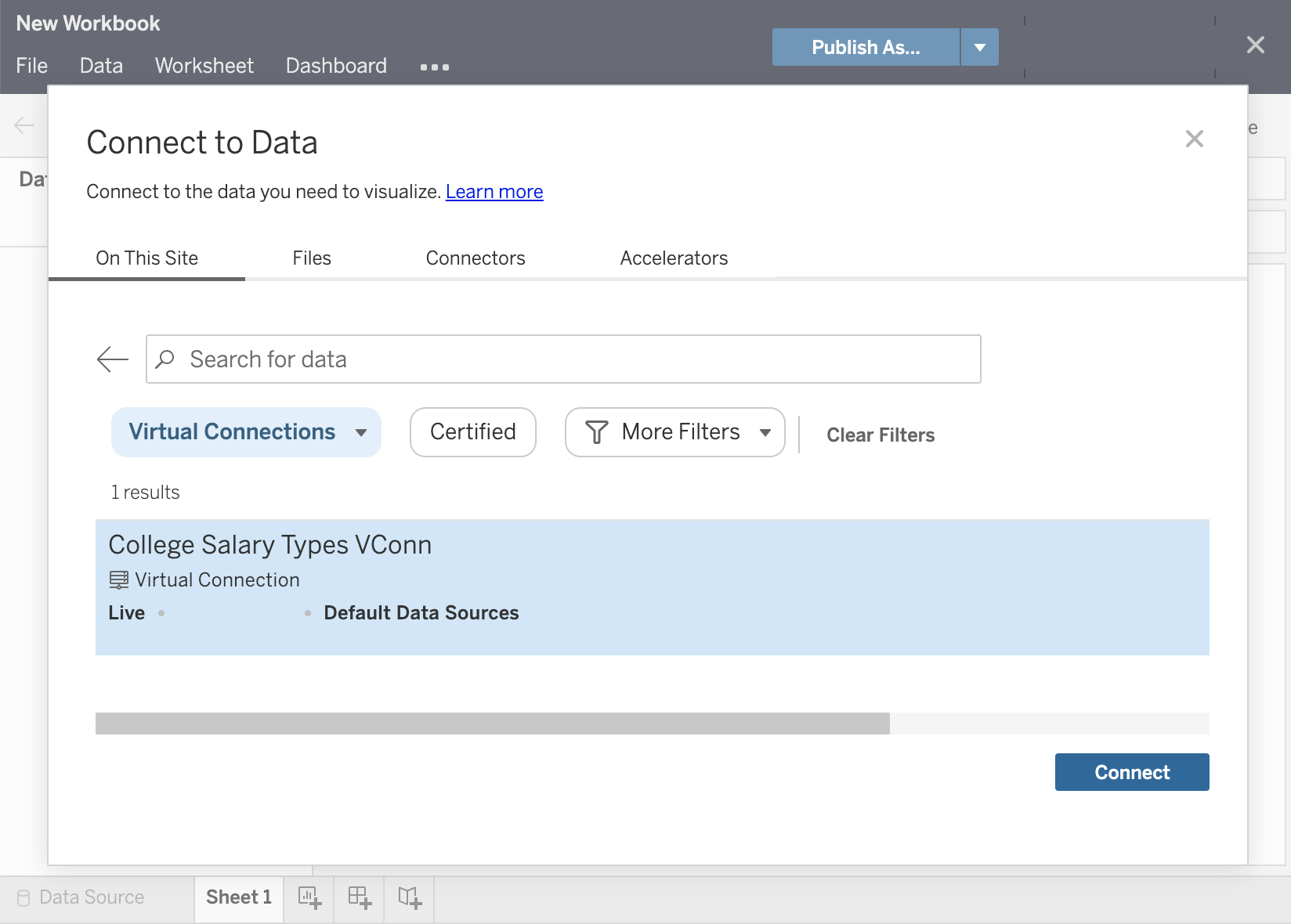

Once a virtual connection has been created and published to Tableau Server or Tableau Cloud and proper permissions have been set, you may connect to the virtual connection in Tableau Desktop or Tableau Server/Cloud just like you would any other data source. However, you won’t need to specify a database server location or supply credentials, and you’ll immediately be able to join tables and proceed to visualize data or publish the data source.

Data Policies

In addition to the centralized database connection features described earlier, Tableau Data Management virtual connections also provide a more streamlined centralized Row Level Security option with Data Policies. Use a data policy to apply row-level security to one or more tables in a virtual connection. A data policy filters the data, ensuring that users see only the data they're supposed to see. Data policies apply to both live and extract connections.

When are data policies useful?

It’s common in many organizations to automatically restrict data visible in a visualization to only what is applicable to the current user. For example, consider a shared dashboard that contains order details in a cross tab object.

- If you are a Sales Manager for a large territory, the details cross tab will show orders for every account executive in your territory.
- However, if you're an individual account executive, the details cross tab will only show orders for your accounts.

This scenario requires that Row Level Security be implemented in your Tableau environment, which can be accomplished with one of several methods, including:

- Row level security in the database. Every time a visualization is viewed, the viewer is prompted to log into the underlying database with their own credentials or their credentials are inherited from their Tableau user account. The resulting data set is restricted to only data they are permitted to see based on the credentials supplied. Not only can this rapidly become tedious with each viewer being required to maintain their own credentials, but the live data connection may impact performance by placing a large burden on the underlying database. Furthermore, some options for passing on credentials to live connections may be restricted with Tableau Cloud.
- Tableau User Filters. User filters are applied when creating individual worksheets within a workbook. By specifying combinations of either individual Tableau user credentials, or membership in one or more Tableau user groups, individual worksheets can be filtered to only show data relevant to that user. This may become tedious, as each individual worksheet within a workbook requires user filters to be supplied; there is no way of specifying a user filter over a large group of workbooks with one process. Additionally, if a user is inadvertently given edit permissions to the workbook, they may easily drag the user filter off the filter shelf and see all underlying data that they may not have permission to view.

By making use of a subset of the Tableau calculation language, data policies can specify sophisticated rules (perhaps by use of a related “entitlement table” in a database) to customize and limit data that the virtual connection returns, based on user ID or group membership. Not only does this maintain Row Level Security at the data source level (all workbooks connected to the data source will automatically inherit the security and adopt any changes made within the virtual connection), it adds an additional layer of security by restricting any modification of data policies to only those with edit permissions for the original virtual connection.
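The entitlement-table approach can be illustrated outside Tableau with plain Python. This sketch shows only the filtering logic; a real data policy is written in the Tableau calculation language inside the virtual connection, and the user names, table contents, and function name here are hypothetical.

```python
# Hypothetical entitlement table: (viewer, account executive whose orders they may see).
ENTITLEMENTS = [
    ("maria", "bob"),    # maria is a sales manager covering bob and chen
    ("maria", "chen"),
    ("bob", "bob"),      # account executives see only their own orders
    ("chen", "chen"),
]

# Hypothetical orders table.
ORDERS = [
    {"order_id": 1001, "account_exec": "bob"},
    {"order_id": 1002, "account_exec": "chen"},
    {"order_id": 1003, "account_exec": "bob"},
]


def visible_orders(username, orders=ORDERS, entitlements=ENTITLEMENTS):
    """Return only the rows the given user is entitled to see."""
    allowed = {owner for user, owner in entitlements if user == username}
    return [row for row in orders if row["account_exec"] in allowed]


if __name__ == "__main__":
    for user in ("maria", "bob", "guest"):
        ids = [row["order_id"] for row in visible_orders(user)]
        print(user, ids)
```

Because the filter is applied where the data is served rather than in each worksheet, every consumer of the rows gets the same restriction, and a user with no entitlement rows sees nothing.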