Data Strategy

This content is part of Tableau Blueprint, a maturity framework that lets you zoom in and improve how your organization uses data to drive impact. To begin your journey, take our assessment.

Every organization has different requirements and solutions for its data infrastructure. Tableau respects your organization’s choices and integrates with your existing data strategy. In addition to the enterprise data warehouse (EDW), many new sources of data are appearing inside and outside of your organization: cloud applications and data, big data databases, and structured and unstructured repositories. From Hadoop clusters to NoSQL databases and many others, the data flow no longer needs to be centralized around the EDW as a final destination.

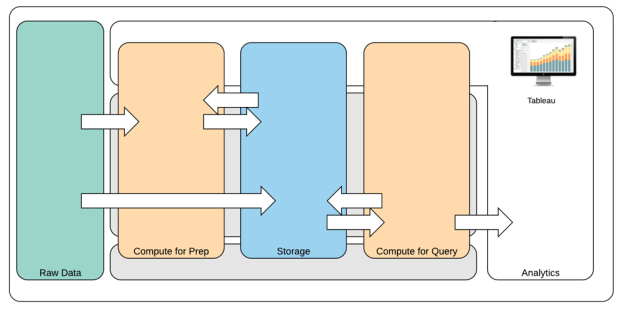

Modern data architecture is driven by new business requirements (speed, agility, volume) and new technology. You choose whether to provide access to the data in place or enrich data with other sources. Combine this with cloud solutions that allow infrastructure and services to spin up data pipelines in hours, and you have a process for moving data around an organization like never before. Unfortunately, the new opportunity is largely missed if your organization’s data management handbook was written with a traditional EDW’s single-bucket-of-data mindset. The trick to shifting from buckets to pipelines is accepting that not all data questions within an organization can be answered from any one data source. The pattern for a modern data architecture is shown below.

Modern Data Architecture

- Raw Data: Sources of data, such as transactional data, that are loaded into the data platform and often need transforming in several ways: cleansing, inspection for PII, etc.

- Compute for Prep: Processing the raw data can require significant computational resources, so this layer is more than traditional ETL. Data science applications often sit here, and they can create new, high-value data.

- Storage: Modern data platforms are built on a principle of storing data because you never know how it might be used in the future. Increasingly, we store intermediate data and multiple versions and forms of the same data. Storage is therefore layered.

- Compute for Query: The typical analytic database engine, including Hyper extracts, but also Hadoop, etc.

- Analytics: Tableau sits in Analytics.

Tableau’s Hybrid Data Architecture

Tableau’s hybrid data architecture provides two modes for interacting with data: a live connection or an in-memory extract. Switching between the two is as easy as selecting the right option for your use case.

Live Connection

Tableau’s data connectors leverage your existing data infrastructure by sending dynamic queries directly to the source database rather than importing all the data. This means that if you’ve invested in fast, analytics-optimized databases, you can gain the benefits of that investment by connecting live to your data. This leaves the detail data in the source system and sends the aggregate results of queries to Tableau. Additionally, this means that Tableau can effectively utilize unlimited amounts of data. In fact, Tableau is the front-end analytics client to many of the largest databases in the world. Tableau has optimized each connector to take advantage of the unique characteristics of each data source.
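The idea of leaving detail data in the source and returning only aggregate results can be illustrated with a minimal sketch. It uses Python's built-in `sqlite3` module as a stand-in for the source database; the `orders` table, its columns, and the `query_aggregate` helper are hypothetical and are not part of any Tableau API.

```python
import sqlite3

# Hypothetical stand-in for the source database; names are illustrative only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("East", 100.0), ("East", 50.0), ("West", 75.0), ("West", 25.0), ("West", 10.0)],
)

def query_aggregate(connection):
    """Run the aggregation inside the database and return only the summary rows."""
    sql = "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region"
    return connection.execute(sql).fetchall()

summary = query_aggregate(conn)
detail_rows = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]

print(summary)      # two aggregate rows are returned to the client
print(detail_rows)  # five detail rows remain in the source
```

The client receives one row per region, no matter how many detail rows the source holds. This is why a fast, analytics-optimized database pays off with a live connection.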

In-Memory Extract

If you have a data architecture built on transactional databases or want to reduce the workload of the core data infrastructure, Tableau’s Data Engine powered by Hyper technology provides an in-memory data store that is optimized for analytics. You can connect and extract your data to bring it in-memory to perform queries in Tableau with one click. Using Tableau Data Extracts can greatly improve the user experience by reducing the time it takes to re-query the database. In turn, extracts free up the database server from redundant query traffic.

Extracts are a great solution for highly active transactional systems that cannot afford the resources for frequent queries. The extract can be refreshed nightly and made available to users during the day. Extracts can also be subsets of data based on a fixed number of records, a percentage of total records, or filter criteria. The Data Engine can even do incremental extracts that update existing extracts with new data. Extracts are not intended to replace your database, so right-size the extract to the analysis at hand.
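As a rough illustration of the incremental idea, the sketch below appends only rows that are newer than what the extract already holds. It assumes new rows can be identified by an increasing key column. The `incremental_refresh` function and the `order_id` column are hypothetical, and the Data Engine's actual mechanism is not shown here.

```python
def incremental_refresh(extract, source_rows, key="order_id"):
    """Append only source rows whose key exceeds the highest key already extracted."""
    high_water_mark = max((row[key] for row in extract), default=None)
    new_rows = [
        row for row in source_rows
        if high_water_mark is None or row[key] > high_water_mark
    ]
    extract.extend(new_rows)
    return len(new_rows)

source = [{"order_id": 1, "amount": 10}, {"order_id": 2, "amount": 20}]
extract = []

first = incremental_refresh(extract, source)   # initial load copies both rows
source.append({"order_id": 3, "amount": 30})
second = incremental_refresh(extract, source)  # only the new row is appended
```

Under this assumption, rows that were updated or deleted in the source are not picked up, so a periodic full refresh remains useful.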

If you need to share your workbooks with users who do not have direct access to the underlying data sources, you can use extracts. Tableau’s packaged workbooks (.twbx file type) contain all the analysis and data used for the workbook, making them both portable and shareable with other Tableau users.

If a user publishes a workbook using an extract, that extract is also published to the Tableau Server or Tableau Cloud. Future interaction with the workbook will use the extract instead of requesting live data. If enabled, the workbook can be set to request an automatic refresh of the extract on a schedule.

Query Federation

When related data is stored in tables across different databases or files, you can use a cross-database join to combine the tables. To create a cross-database join, you create a multi-connection Tableau data source by adding and then connecting to each of the different databases (including Excel and text files) before you join the tables. Cross-database joins can be used with live connections or in-memory extracts.
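Conceptually, a cross-database join combines rows from separate sources in a layer that sits outside both of them. The sketch below shows that idea with a SQLite table and an in-memory CSV file. The table, the column names, and the `cross_source_join` helper are hypothetical, and this is not how Tableau implements federation internally.

```python
import csv
import io
import sqlite3

# Source 1: a database table (hypothetical schema).
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE sales (customer_id INTEGER, amount REAL)")
db.executemany("INSERT INTO sales VALUES (?, ?)", [(1, 120.0), (2, 80.0), (1, 30.0)])

# Source 2: a text file, simulated here with an in-memory CSV.
customers_csv = io.StringIO("customer_id,name\n1,Acme\n2,Globex\n")

def cross_source_join(connection, csv_file):
    """Inner-join database rows to CSV rows on customer_id, outside either source."""
    names = {int(r["customer_id"]): r["name"] for r in csv.DictReader(csv_file)}
    rows = connection.execute(
        "SELECT customer_id, amount FROM sales ORDER BY customer_id, amount"
    ).fetchall()
    return [(names[cid], amount) for cid, amount in rows if cid in names]

joined = cross_source_join(db, customers_csv)
print(joined)
```

Because the join runs outside both sources, neither one needs to know about the other. Only the shared key, `customer_id` in this example, has to line up.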

Data Server

Included with Tableau Server and Tableau Cloud, Data Server provides sharing and centralized management of extracts and shared proxy database connections. This makes governed, measured, and managed data sources available to all users of Tableau Server or Tableau Cloud without duplicating extracts or data connections across workbooks.

Because multiple workbooks can connect to one data source, you can minimize the proliferation of embedded data sources and save on storage space and processing time. When someone downloads a workbook that connects to a Published Data Source that in turn has an extract connection, the extract stays in Tableau Server or Tableau Cloud, reducing network traffic. Finally, if a connection requires a database driver, you need to install and maintain the driver only on the Tableau Server, instead of on each user’s computer. Similarly for Tableau Cloud, database drivers are managed by Tableau for supported sources of data.

Using the initial data use cases collected from each team, a DBA and/or Data Steward will publish a certified data source for each source of data identified for users with the appropriate permissions to access it. Users can connect directly to a Published Data Source from Tableau Desktop and Tableau Server or Tableau Cloud.

Published Data Sources prevent the proliferation of data silos and untrusted data for both extract and live connections. Extract refreshes can be scheduled, and users across the organization will stay up to date with the same shared data and definitions. A Published Data Source can be configured to connect directly to live data with a proxy database connection. This means your organization has a way to centrally manage data connections, join logic, metadata, and calculated fields.

At the same time, to enable self-service and flexibility, users can extend the data model by blending in new data or creating new calculations and allow the newly defined data model to be delivered to production in an agile manner. The centrally managed data will not change, but users retain flexibility.

Certified Data Sources

Database administrators and/or Data Stewards should certify Published Data Sources to indicate to users that the data is trusted. Certified data sources appear with a unique certification badge in Tableau Server, Tableau Cloud, and Tableau Desktop. Certification notes allow you to describe why a particular data source can be trusted. These notes, along with the name of the person who certified the data source, are accessible throughout Tableau when viewing the data source. Certified data sources receive preferential treatment in search results and stand out in data source lists in Tableau Server, Tableau Cloud, and Tableau Desktop. Project leaders, Tableau Cloud Site Administrators, and Tableau Server/Site Administrators have permission to certify data sources. For more information, visit Certified Data Sources.

Data Security

Data security is of utmost importance in every enterprise. Tableau allows customers to build upon their existing data security implementations. IT administrators have the flexibility to implement security within the database with database authentication, within Tableau with permissions, or a hybrid approach of both. Security will be enforced regardless of whether users are accessing the data from published views on the web, on mobile devices, or through Tableau Desktop and Tableau Prep Builder. Customers often favor the hybrid approach for its flexibility to handle different kinds of use cases. Start by establishing a data security classification to define the different types of data and levels of sensitivity that exist in your organization.

When you rely on database security, the method chosen for authenticating to the database is key. This level of authentication is separate from Tableau Server or Tableau Cloud authentication (i.e., when users log into Tableau Server or Tableau Cloud, they are not yet logging into the database). This means that Tableau Server and Tableau Cloud users also need credentials (their own username/password or a service account username/password) to connect to the database for the database-level security to apply. To further protect your data, Tableau only needs read-access credentials to the database, which prevents publishers from accidentally changing the underlying data. Alternatively, in some cases, it is useful to give the database user permission to create temporary tables. This can have both performance and security advantages because the temporary data is stored in the database rather than in Tableau. For Tableau Cloud, to use automatic refreshes, you need to embed credentials in the connection information for the data source. For Google and Salesforce.com data sources, you can embed credentials in the form of OAuth 2.0 access tokens.

Extract encryption at rest is a data security feature that allows you to encrypt .hyper extracts while they are stored on Tableau Server. Tableau Server administrators can enforce encryption of all extracts on their site or allow users to specify to encrypt all extracts associated with particular published workbooks or data sources. For more information, see Extract Encryption at Rest.

If your organization is deploying Data Extract Encryption at Rest, then you may optionally configure Tableau Server to use AWS or Azure as the KMS for extract encryption. To enable AWS KMS or Azure KMS, you must deploy Tableau Server in AWS or Azure, respectively, and be licensed for Advanced Management for Tableau Server. In the AWS scenario, Tableau Server uses the AWS KMS customer master key (CMK) to generate an AWS data key. Tableau Server uses the AWS data key as the root master key for all encrypted extracts. In the Azure scenario, Tableau Server uses the Azure Key Vault to encrypt the root master key (RMK) for all encrypted extracts. However, even when configured for AWS KMS or Azure KMS integration, the native Java keystore and local KMS are still used for secure storage of secrets on Tableau Server. The AWS KMS or Azure KMS is only used to encrypt the root master key for encrypted extracts. For more information, see Key Management System.

For Tableau Cloud, all data is encrypted at rest by default. With Advanced Management for Tableau Cloud, however, you can take more control over key rotation and auditing by using Customer-Managed Encryption Keys. Customer-Managed Encryption Keys give you an extra level of security by allowing you to encrypt your site’s data extracts with a customer-managed, site-specific key. The Salesforce Key Management System (KMS) instance stores the default site-specific encryption key for anyone who enables encryption on a site. The encryption process follows a key hierarchy. First, Tableau Cloud encrypts an extract. Next, Tableau Cloud KMS checks its key caches for a suitable data key. If a key isn’t found, one is generated by the KMS GenerateDataKey API, using the permission granted by the key policy that's associated with the key. AWS KMS uses the CMK to generate a data key and returns a plaintext copy and an encrypted copy to Tableau Cloud. Tableau Cloud uses the plaintext copy of the data key to encrypt the data and stores the encrypted copy of the key along with the encrypted data.
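The key hierarchy described above follows a pattern often called envelope encryption, which can be sketched as follows. Everything in this example is a hypothetical stand-in: `ToyKms` is not the AWS or Salesforce KMS, `_xor_stream` is a toy cipher used only so the example runs on the standard library (it is not secure), and the key cache lookup is omitted.

```python
import hashlib
import secrets

def _xor_stream(key: bytes, data: bytes) -> bytes:
    """Toy cipher for illustration only: XOR with a SHA-256-derived keystream. NOT secure."""
    stream = bytearray()
    counter = 0
    while len(stream) < len(data):
        stream.extend(hashlib.sha256(key + counter.to_bytes(8, "big")).digest())
        counter += 1
    return bytes(a ^ b for a, b in zip(data, stream))

class ToyKms:
    """Hypothetical stand-in for a KMS that holds a master key and issues data keys."""

    def __init__(self):
        self._master_key = secrets.token_bytes(32)

    def generate_data_key(self):
        """Return a plaintext data key and a copy encrypted under the master key."""
        plaintext_key = secrets.token_bytes(32)
        encrypted_key = _xor_stream(self._master_key, plaintext_key)
        return plaintext_key, encrypted_key

    def decrypt_data_key(self, encrypted_key):
        return _xor_stream(self._master_key, encrypted_key)

def encrypt_extract(kms, extract_bytes):
    """Encrypt data with a fresh data key; store only the encrypted key beside the data."""
    plaintext_key, encrypted_key = kms.generate_data_key()
    ciphertext = _xor_stream(plaintext_key, extract_bytes)
    return {"encrypted_key": encrypted_key, "ciphertext": ciphertext}

def decrypt_extract(kms, stored):
    """Ask the KMS to unwrap the data key, then decrypt the data with it."""
    plaintext_key = kms.decrypt_data_key(stored["encrypted_key"])
    return _xor_stream(plaintext_key, stored["ciphertext"])

original = b"region,amount\nEast,150\n"
kms = ToyKms()
stored = encrypt_extract(kms, original)
restored = decrypt_extract(kms, stored)
```

The point of the pattern is that the master key never leaves the KMS. Only the encrypted data key is stored beside the data, so reading the extract requires a call back to the KMS that the key policy can allow, deny, and audit.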

You can limit which users see what data by setting user filters on data sources in both Tableau Server and Tableau Cloud. This allows you to better control what data users see in a published view based on their Tableau Server login account. Using this technique, a regional manager is able to view data for her region but not the data for the other regional managers. With these data security approaches, you can publish a single view or dashboard in a way that provides secure, personalized data and analysis to a wide range of users on Tableau Cloud or Tableau Server. For more information, see Data Security and Restrict Access at the Data Row Level. If row-level security is paramount to your analytics use case, with Tableau Data Management, you can leverage virtual connections with data policies to implement user filtering at scale. For more information, see Virtual Connections and Data Policies.
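The regional manager scenario can be sketched as a simple row filter keyed on the signed-in user. The `USER_REGIONS` mapping, the sample rows, and the `rows_visible_to` function are hypothetical illustrations of the concept. They do not represent Tableau's user filter syntax or its data policy language.

```python
# Hypothetical mapping of login names to the regions each user may see.
USER_REGIONS = {
    "maria": {"East"},
    "chen": {"West"},
    "admin": {"East", "West"},
}

ROWS = [
    {"region": "East", "sales": 150},
    {"region": "West", "sales": 110},
    {"region": "East", "sales": 40},
]

def rows_visible_to(username, rows, user_regions=USER_REGIONS):
    """Return only the rows whose region the given user is entitled to see."""
    allowed = user_regions.get(username, set())
    return [row for row in rows if row["region"] in allowed]

maria_rows = rows_visible_to("maria", ROWS)
unknown_rows = rows_visible_to("guest", ROWS)  # unmapped users see nothing
```

Defaulting an unmapped user to an empty set means a missing entitlement hides data instead of exposing it, which is usually the safer failure mode for row-level security.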